Open Dataset

songKitamura

songKitamura is an artificial music dataset for evaluating audio source separation techniques. This dataset is available for academic research purposes only. When you publish papers using this dataset, please cite the following paper, which describes the dataset in detail:

- Daichi Kitamura, Hiroshi Saruwatari, Hirokazu Kameoka, Yu Takahashi, Kazunobu Kondo, and Satoshi Nakamura, "Multichannel signal separation combining directional clustering and nonnegative matrix factorization with spectrogram restoration," IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 23, no. 4, pp. 654–669, April 2015.

In addition, please cite the URL of this web page.

Download

The dataset is described below:

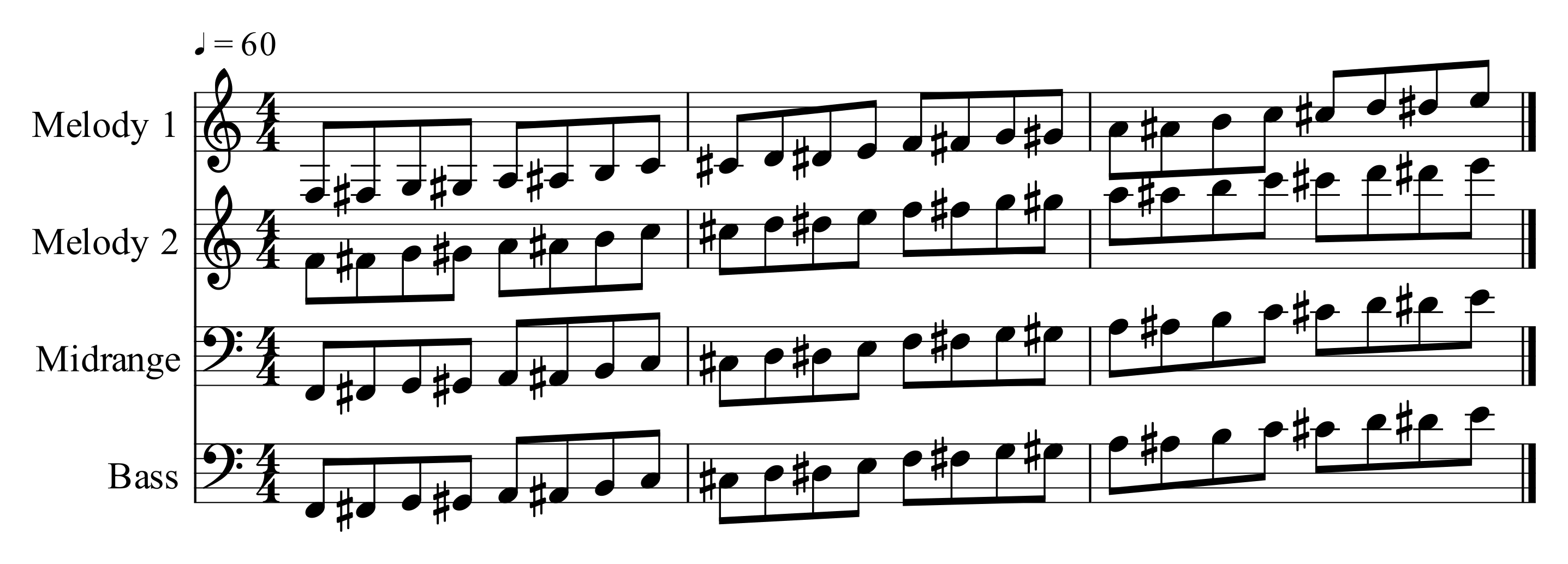

Four melody parts

songKitamura consists of four melody parts: Melody 1 and Melody 2 (high range), Midrange, and Bass (low range).

11 musical instruments

- Melody 1
  - Oboe, Trumpet, Horn
- Melody 2
  - Flute, Violin, Clarinet
- Midrange
  - Piano, Harpsichord (a.k.a. Cembalo)
- Bass
  - Trombone, Fagot (a.k.a. Bassoon), Violoncello

The four melody parts are played by the 11 instruments listed above.

Three MIDI sound sources

- MGS
  - Microsoft GS Wavetable Synth
- YMH
  - YAMAHA MU-1000
- GPO
  - Garritan Personal Orchestra 4

Each instrument is played with each of the three MIDI sound sources above. MGS has the simplest timbre, GPO has the most realistic timbre, and YMH lies between the two.

Two-octave ascending scales

As sample audio signals, a two-octave ascending scale is included for each musical instrument (YMH and GPO only). Each scale covers the pitch range of the corresponding melody part.
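The structure described above can be summarized in a short Python sketch. It only counts what the description implies; the dictionary layout, the function names, and the idea of forming a mixture by picking one instrument per part are illustrative assumptions, not the dataset's actual file layout or naming.

```python
from itertools import product

# Part -> instruments, transcribed from the description above.
# The keys and labels are illustrative, not actual file or folder names.
PARTS = {
    "Melody 1": ["Oboe", "Trumpet", "Horn"],
    "Melody 2": ["Flute", "Violin", "Clarinet"],
    "Midrange": ["Piano", "Harpsichord"],
    "Bass": ["Trombone", "Fagot", "Violoncello"],
}

# Abbreviation -> MIDI sound source, as listed above.
SOURCES = {
    "MGS": "Microsoft GS Wavetable Synth",
    "YMH": "YAMAHA MU-1000",
    "GPO": "Garritan Personal Orchestra 4",
}

# Scale samples are stated to exist only for these two sources.
SCALE_SOURCES = ("YMH", "GPO")


def instrument_signals():
    """Every (part, instrument, source) triple: each instrument with each source."""
    return [
        (part, inst, src)
        for part, insts in PARTS.items()
        for inst in insts
        for src in SOURCES
    ]


def scale_signals():
    """Every (instrument, source) pair for which a scale sample is described."""
    return [
        (inst, src)
        for insts in PARTS.values()
        for inst in insts
        for src in SCALE_SOURCES
    ]


def four_part_combinations():
    """Hypothetical: choose one instrument per part to form a four-part mixture."""
    return list(product(*PARTS.values()))


signals = instrument_signals()
scales = scale_signals()
combos = four_part_combinations()

print(len(signals))  # 11 instruments x 3 sources = 33
print(len(scales))   # 11 instruments x 2 sources = 22
print(len(combos))   # 3 x 3 x 2 x 3 = 54
```

The count of 54 is only the number of ways to pick one instrument per part; which combinations are actually used in an experiment is up to the user and is not specified on this page.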